Nvidia has struck a non-exclusive technology licensing and talent deal with artificial intelligence (AI) startup Groq, a move aimed at strengthening its position as competition intensifies in the market for running AI systems at scale.

Under the agreement, announced by Groq on Dec. 24, Nvidia will license Groq’s AI technology and hire several of the startup’s top leaders and engineers. Groq founder Jonathan Ross, President Sunny Madra, and other members of the company’s technical team will join Nvidia to help advance and scale the licensed technology.

“The agreement reflects a shared focus on expanding access to high-performance, low-cost inference,” Groq said in the announcement.

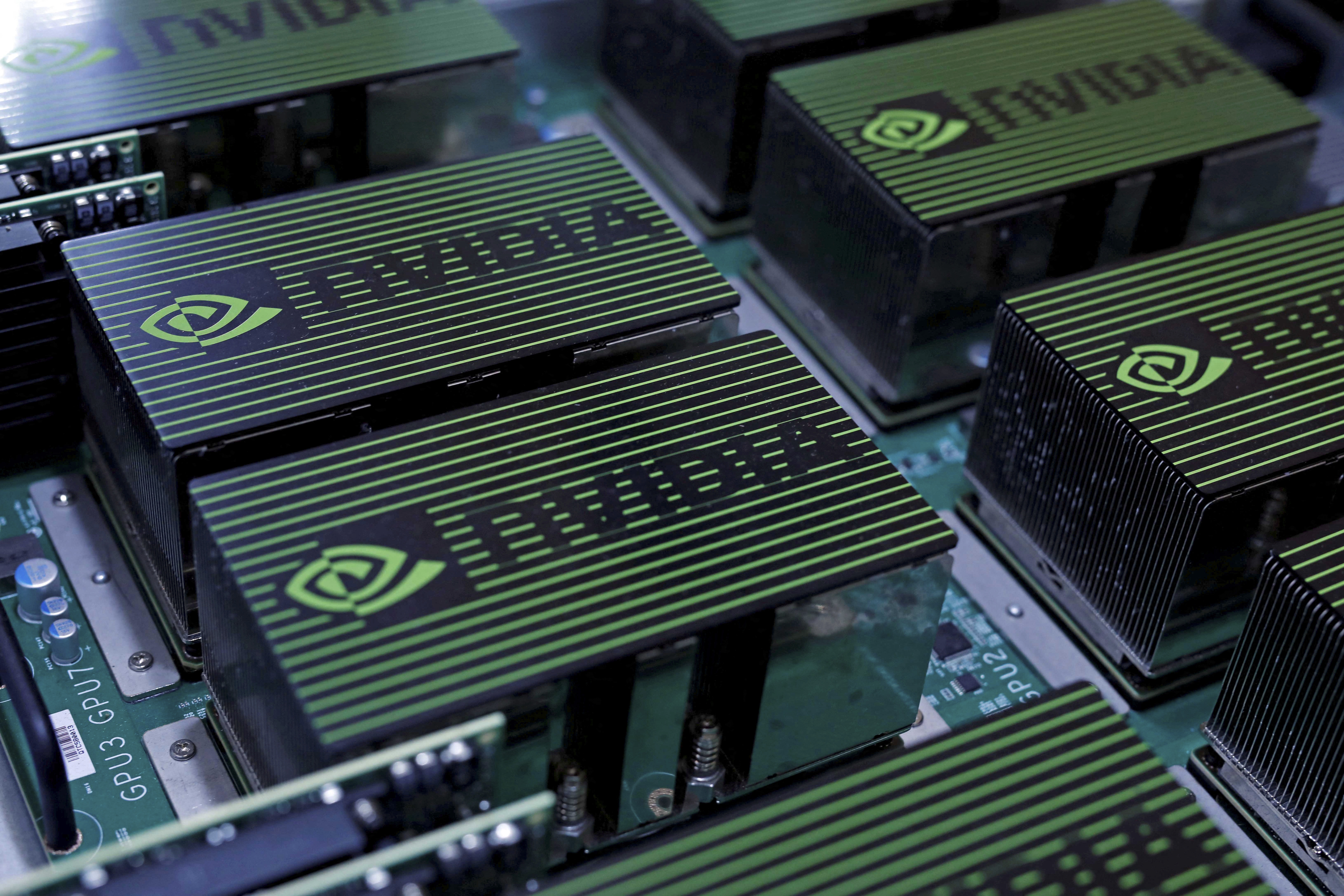

Founded in 2016, Groq specializes in inference technology, which is used to run artificial intelligence systems once they have been trained. The company has developed a custom Language Processing Unit (LPU), a purpose-built AI accelerator chip optimized to run AI models quickly and predictably while keeping costs low.

“Our platform is purpose-built for inference, delivering consistently high performance at the lowest cost per token in the industry,” Ross said in May, when Groq was picked as the official inference provider for a Saudi Arabia-based company as the kingdom seeks to supercharge AI development in the region.

According to PitchBook, which tracks private companies, Groq’s AI inference technology is designed to address industry demands for computing speed, quality, and energy efficiency. PitchBook describes Groq’s systems as “deterministic single-core streaming architectures that predict exactly the performance and compute time for any given workload,” allowing companies to improve AI performance while controlling costs and energy use.

In a recent blog post, Groq described its inference technology as complementary to Nvidia’s training-focused GPU platforms, and as the key enabler of AI development at global scale.

“The next decade of global competition will be defined not only by who invents the most powerful AI systems, but also by who can deploy and operate them securely, efficiently, and at scale,” Groq said. “As AI applications move from laboratory to deployment, inference becomes the bottleneck that determines which nations can actually operationalize artificial intelligence at global scale.”

In announcing the Nvidia deal, Groq said it will continue to operate as an independent company, with Simon Edwards stepping into the role of chief executive officer. Its cloud platform, GroqCloud, will continue operating without interruption.

The transaction reflects a familiar pattern in the AI sector, where major technology firms secure promising technology and talent through licensing deals and executive hires rather than full buyouts. Such a structure can accelerate integration while reducing regulatory exposure.

For Nvidia, the deal strengthens its position as the AI market shifts from building ever-larger models toward deploying them broadly across businesses, governments, and consumer applications. Nvidia CEO Jensen Huang has previously said that the company is well positioned to maintain its leadership as demand moves from training AI models toward large-scale deployment.

According to PitchBook, Groq has raised more than $3 billion from investors and employs roughly 485 people. It most recently raised $750 million in a funding round in September that valued the company at about $6.9 billion.

Deploying AI at Global Scale

President Donald Trump issued an executive order in July promoting the export of the “American AI Technology Stack,” which focuses on the global deployment of U.S.-origin AI technology.

“The United States must not only lead in developing general-purpose and frontier AI capabilities, but also ensure that American AI technologies, standards, and governance models are adopted worldwide to strengthen relationships with our allies and secure our continued technological dominance,” Trump wrote in the order, which established a coordinated national effort to support U.S. industry by promoting the export of full-stack American AI technology packages.

Groq said it is already playing a central role in this initiative, with its American-built inference infrastructure powering more than 2 million developers and Fortune 500 companies around the world.

“Inference is defining this era of AI, and we’re building the American infrastructure that delivers it with high speed and low cost,” Ross said in September.

More recently, Groq partnered with the U.S. Department of Energy to accelerate advanced computing initiatives through the agency’s Genesis Mission, a national effort launched by executive order in November that aims to speed the application of AI for “transformative scientific discovery focused on pressing national challenges.”