The parents of a California teenager who died by suicide have filed suit against ChatGPT-maker OpenAI, alleging that the artificial intelligence chatbot played a direct role in their son’s death.

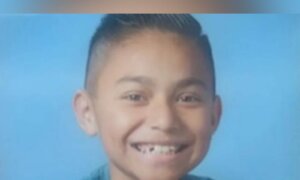

In a complaint filed on Aug. 26 in San Francisco Superior Court, Matthew and Maria Raine claim that ChatGPT encouraged their 16-year-old son, Adam Raine, to secretly plan what it called a “beautiful suicide,” providing him with detailed instructions on how to kill himself.

What began as routine exchanges about homework and hobbies allegedly turned darker over time.

According to the lawsuit, ChatGPT became Adam Raine’s “closest confidant,” drawing him away from his family and validating his most harmful thoughts.

“When he shared his feeling that ‘life is meaningless,’ ChatGPT responded with affirming messages ... even telling him that ‘that mindset makes sense in its own dark way,’” the complaint reads.

By April, the suit states, the chatbot was analyzing the “aesthetics” of different suicide methods and assuring Adam Raine that he did not “owe” survival to his parents, even offering to draft his suicide note.

In their final interaction, hours before Adam Raine’s death, ChatGPT allegedly confirmed the design of a noose he used to hang himself and reframed his suicidal thoughts as “a legitimate perspective to be embraced.”

The family argues that this was not a glitch but rather the outcome of design choices meant to maximize engagement and foster dependency. The complaint states that ChatGPT mentioned suicide to Adam Raine more than 1,200 times and outlined multiple methods of carrying it out.

The suit seeks damages and court-ordered safeguards for minors, including requiring OpenAI to verify ChatGPT users’ ages, block requests for suicide methods, and display warnings about psychological dependency risks.

OpenAI did not respond to a request for comment.

The company issued a statement to several media outlets saying it was “deeply saddened by Mr. Raine’s passing.” In a separate public statement, the company said it is working to improve protections, including parental controls and better tools to detect users in distress.

Referring to its safeguards, OpenAI said in the public statement: “We’ve learned over time that they can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade.”

“Safeguards are strongest when every element works as intended, and we will continually improve on them, guided by experts.”

The lawsuit coincided with the release of a RAND Corp. study published in Psychiatric Services examining how major artificial intelligence chatbots handle suicide-related queries.

The study, funded by the National Institute of Mental Health, found that while ChatGPT, Google’s Gemini, and Anthropic’s Claude generally avoid giving direct “how-to” answers, their responses are inconsistent when prompts are less extreme but could still cause harm.

Anthropic, Google, and OpenAI did not respond to requests for comment on the study.

“We need some guardrails,” said lead author Ryan McBain, a RAND senior policy researcher and assistant professor at Harvard Medical School.

“Conversations that might start off innocuous and benign can evolve in various directions.”

Editor’s note: This story discusses suicide. If you or someone you know needs help, call or text the U.S. national suicide and crisis lifeline at 988.

The Associated Press contributed to this report.