Access to complete, factual, and unbiased information has long been considered a cornerstone of a free society.

Yet as a surging volume of information is held by private companies, including social media and artificial intelligence (AI) companies, and by the federal government, concerns about privacy and freedom of information have also grown.

We asked readers to weigh in on AI regulation and, more generally, on the public’s right to know.

An unusually high percentage of the 8,604 respondents to our Dec. 3 poll affirmed that information should be freely and transparently provided to the public. However, respondents did acknowledge some exceptions.

AI Transparency

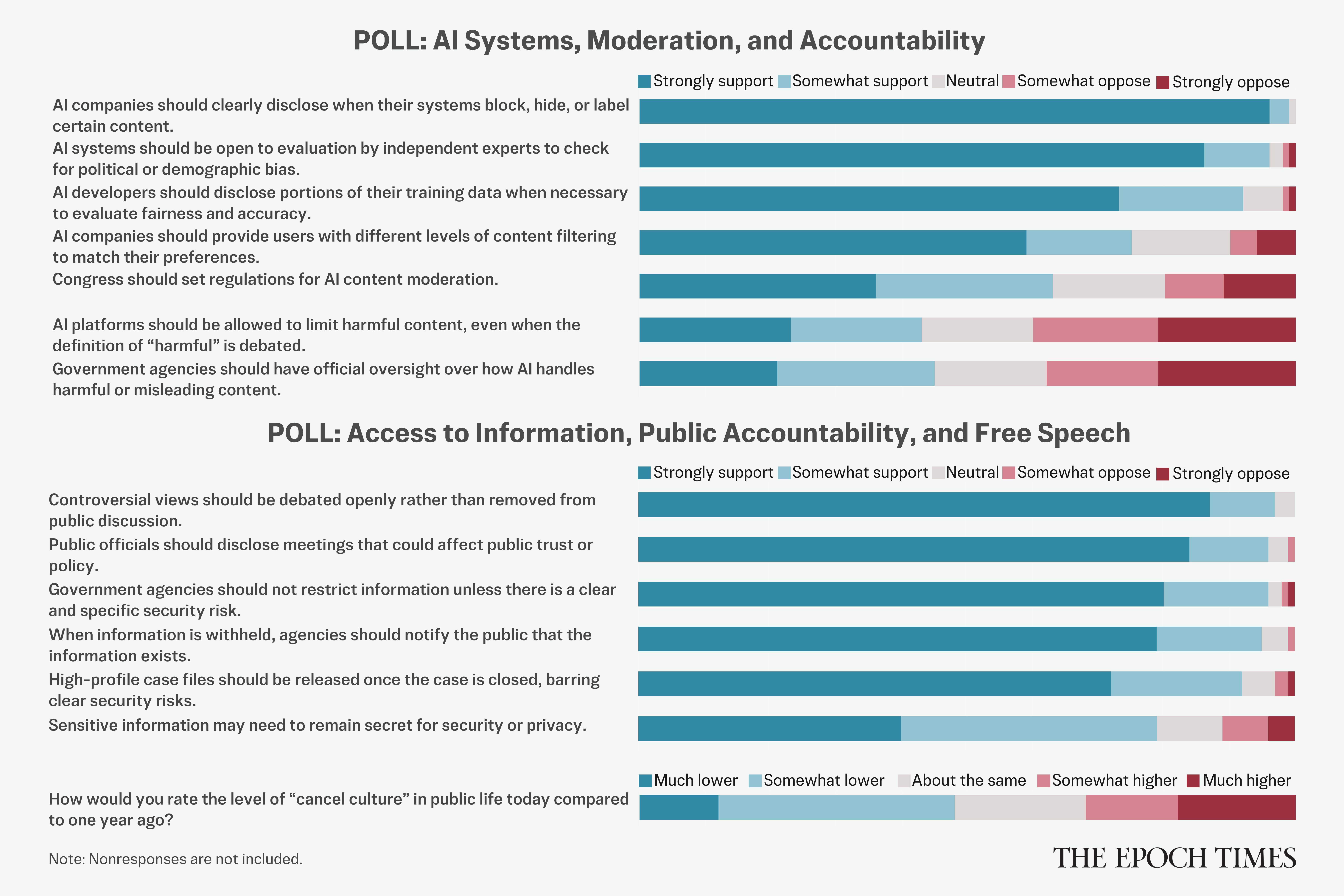

Respondents were nearly unanimous in their belief that AI companies should practice transparency about how information is developed and when it is withheld from the public.

A whopping 99 percent of respondents agreed that AI companies should clearly disclose when their systems block, hide, or label certain content. Ninety-six percent of respondents strongly agreed, and just 1 percent were neutral.

Readers also agreed (96 percent) that AI systems should be open to evaluation by independent experts to check for political or demographic bias. Eighty-six percent strongly agreed, and just 2 percent were opposed to the idea.

People look at their smartphones in New York City, on May 8, 2019. (Reuters/Mike Segar)

Despite its economic benefits, AI does pose some dangers.

One is that it can introduce bias into the flow of information because of the way AI “thinks.” AI systems are created largely by taking in vast amounts of written information, from which they “learn” to predict the next word in a sequence. Large language models (LLMs), the type of AI that powers a wide variety of applications, including chatbots, learn patterns in that text and generate language that follows those patterns.

However, that means the AI system cannot think beyond the data that it was “trained” on.

Readers agreed in similar numbers that AI developers should disclose portions of their training data when necessary to evaluate fairness and accuracy. Ninety-two percent affirmed this, 73 percent strongly, and just 2 percent disagreed.

Despite their strong desire to see AI information presented openly, 75 percent of respondents believed AI companies should provide users with different levels of content filtering to match their preferences.

We found exceptionally high agreement that corporations and governments should be transparent in their handling of information, protecting the public’s right to know.

At the same time, readers acknowledged that some things should be kept secret.

AI Moderation

Readers were less dogmatic on questions related to government regulation of AI companies.

Sixty-three percent said Congress should set regulations for AI content moderation, but just over one-third (36 percent) felt strongly about that. Some 20 percent disagreed with the idea.

Opinion was nearly even on whether AI platforms should be allowed to limit harmful content, even when the definition of “harmful” is debated. Forty-three percent agreed that such content should be limited, and 40 percent were opposed.

Readers were only slightly more inclined to say that government agencies should have official oversight over how AI handles harmful or misleading content. A plurality of 45 percent agreed that this should be the case, but as many respondents felt strongly negative about it as felt strongly positive.

Information Held by Government

Readers voiced strong opinions on how the government should handle information.

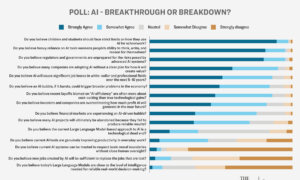

Ninety-seven percent agreed that controversial views should be debated openly rather than removed from public discussion. Less than 1 percent of respondents disagreed.

The ChatGPT app icon is seen on a smartphone screen in Chicago, on Aug. 4, 2025. (AP Photo/Kiichiro Sato)

Similarly, 96 percent agreed that public officials should disclose meetings that could affect public trust or policy. Just 1 percent were somewhat opposed.

Overall agreement remained high that government agencies should not restrict information unless there is a clear and specific security risk (96 percent), and that when information is withheld, agencies should notify the public that the information exists (95 percent).

On those questions, though, the level of strong agreement was a bit lower at 80 percent and 79 percent, respectively.

Ninety-two percent agreed that information in high-profile case files should be released once the case is closed, barring clear security risks.

At the same time, 79 percent agreed that sensitive information may need to remain secret for security or privacy reasons. However, that agreement was split almost evenly between those who strongly agreed (40 percent) and those who somewhat agreed (39 percent).

Cancel Culture

Our question on cancel culture drew mixed opinions. Asked to rate the level of “cancel culture” in public life today compared with one year ago, 48 percent said it was lower. However, most of that group (36 percent of all respondents) said it was only somewhat lower, and 20 percent were neutral on the question.