Online platform Roblox and communications app Discord have been sued by the father of a 10-year-old girl for “recklessly and deceptively operating businesses” in a manner that allegedly led to the child’s kidnapping, according to an Aug. 5 lawsuit filed in San Mateo County Superior Court in California.

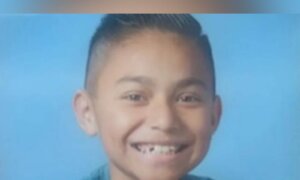

The girl, referred to as Jane Doe, was an “avid user” of Roblox and Discord for several years. Her reliance on these apps for entertainment and social interaction made her a “prime target for the countless child predators that Defendants knew were freely roaming the app looking for vulnerable children,” the complaint said. The girl is a resident of Kern County, California.

Around February 2025, a 27-year-old man targeted the girl on Roblox, posing as a child and offering friendship, the lawsuit said. Two days later, the predator allegedly moved their conversations to Discord, using the platform to build trust.

In April 2025, the man manipulated the girl into revealing her home address under the pretense of checking the distance between them, according to the complaint.

“Once the predator knew where Plaintiff lived, he drove to Plaintiff’s home and kidnapped her. He held Plaintiff against her will for an extended period, including an overnight stay in a hotel parking lot after being denied hotel accommodation,” the lawsuit said.

Law enforcement officers eventually found the girl, in a distressed state, in the predator’s vehicle more than 250 miles from her home. She had been missing for more than 12 hours. The suspect has been arrested and is facing several charges, according to the lawsuit.

The complaint alleged that the girl suffered exploitation and abuse due to the lax conduct of Roblox and Discord.

According to Roblox’s 2024 annual report, the company had 82.9 million average daily active users (DAU) in 2024.

“We estimate that approximately 40 percent of our DAUs were under the age of 13 during the year ended December 31, 2024,” it said.

According to Roblox’s policy, users under the age of 13 require parental permission to access certain chat features.

Discord’s policy states that users must be at least 13 to access the app or its website. When signing up, users are asked to confirm their date of birth to create an account.

“If a user is reported as being under 13, we delete their account unless they can verify that they are at least 13 years old using an official ID document,” it said.

The lawsuit argued that even though Roblox officially requires parental consent for children under the age of 13, nothing prevents minors from creating their own accounts.

“Roblox does nothing to confirm or document that parental permission has been given, no matter how young a child is. Nor does Roblox require a parent to confirm the age given when a child signs up to use Roblox,” it said.

As for Discord, while the app prohibits users under 13, the company does not verify the age or identity of people who sign up, the complaint said.

“Had Defendants implemented even the most basic system of age and identity verification, Plaintiff would never have engaged with this predator and never been harmed,” the lawsuit said.

“Plaintiff is just one of countless children whose lives have been devastated as a result of Defendants’ gross negligence and defectively designed apps. This action, therefore, is not just a battle to vindicate Plaintiff’s rights—it is a stand against Defendants’ systemic failures to protect society’s most vulnerable from unthinkable harm in pursuit of financial gain over child safety.”

The lawsuit demanded a jury trial and sought compensation for the injury allegedly suffered by the girl.

The Epoch Times reached out to Roblox and Discord for comment but did not receive a response by publication time.

Companies Under Scrutiny

An April report from research service Revealing Reality highlighted risks that Roblox poses to children.

It found that adults and children could chat on the platform with “no obvious supervision” and that age verification measures were “easily circumventable.”

“There are spaces focused on adult themes that are accessible to under 13-year-olds,” it said. “The safety controls that exist are limited in their effectiveness and there are still significant risks for children on the platform.”

Roblox and Discord say they have introduced policies to safeguard minors on their platforms.

For instance, in November last year, Roblox announced “major updates” to safety systems and parental controls, including one allowing parents to monitor their child’s screen time from their own devices.

“We’ve updated our built-in maturity settings for our youngest users. Users under 9 can now only access ‘Minimal’ or ‘Mild’ content by default and can access ‘Moderate’ content only with parental consent. Parents still have the option to select the level they feel is most appropriate for their child,” Roblox said.

The safety announcement also said that users under 13 would no longer be able to directly message others on Roblox outside of games, a feature known as platform chat.

As for Discord, the company said its safety controls allow parents to set friend request settings for children as well as block users they think are bothering their kids.

Discord is primarily used by the gaming community for related chats and coordination during gameplay.

Despite these claims, the companies face scrutiny from authorities over child safety issues.

On April 16, Florida Attorney General James Uthmeier issued a subpoena to Roblox, demanding information about the company’s child safety policies, including age verification and chat room moderation.

A day later, New Jersey Attorney General Matthew J. Platkin and the Division of Consumer Affairs sued Discord, according to an April 17 statement.

The lawsuit accuses the company of engaging in “deceptive and unconscionable business practices that misled parents about the efficacy of its safety controls and obscured the risks children faced when using the application.”