Artificial intelligence (AI) has been promoted as a transformational technology, promising efficiency, new discoveries, and a more productive economy.

Yet recent research and real-world results suggest a more complicated picture: Some AI systems underperform outside of controlled tests, and some researchers argue that the current large-language-model approach may not produce the expected results.

Concerns are also growing about the moral risks of AI, its impact on jobs and human judgment, and whether financial markets have created an “AI bubble” detached from actual results.

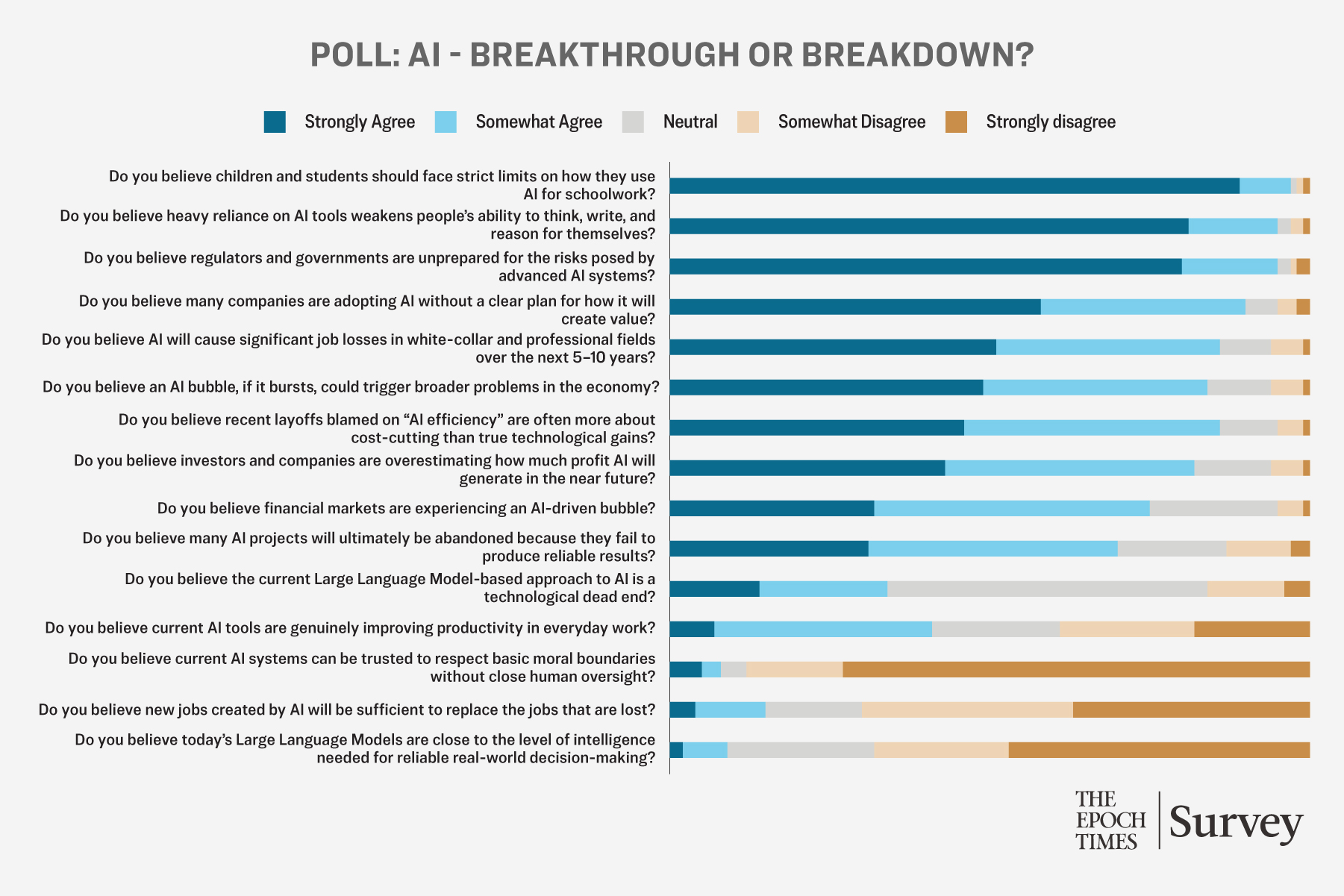

We asked readers to weigh in on the value of AI and found overall skepticism about its current value and a significant level of concern about its future.

Value

Respondents had a mostly negative view of the Large Language Model approach to developing AI.

This approach “teaches” a computer to understand and generate text by training it on a vast body of data. It amounts to pattern recognition, which enables the computer to predict the next word in a string.

This is useful for language translation, summarizing a text, answering questions, and creative writing.
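
The idea of predicting the next word from patterns can be illustrated with a toy sketch. This is only a simplified illustration, not how any production Large Language Model is built: real systems use neural networks trained on enormous datasets, while this example merely counts which word most often follows another in a short, made-up sentence. The function names and the sample text are assumptions chosen for the example.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count which word follows each word in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    # Pair every word with the word that comes right after it.
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text, invented for this illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # prints "cat"
print(predict_next_word(model, "dog"))  # prints "None" (word never seen)
```

In this sketch, "cat" follows "the" twice and "mat" follows it once, so the program predicts "cat." It has no grasp of what a cat is; it only reproduces the most common pattern in the text it was given.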

Readers were split on whether current AI tools are genuinely improving productivity in everyday work. Forty-one percent thought so, 39 percent didn’t, and 20 percent were neutral on the question.

An illustration shows the ChatGPT artificial intelligence software in a file image. Forming romantic or platonic ties with “AI companions” has become increasingly common among tens of thousands of AI chatbot users. (Nicolas Maeterlinck/Belga Mag/AFP via Getty Images)

Most readers (70 percent) agreed that many AI projects will ultimately be abandoned because they fail to produce reliable results.

More than two-thirds (68 percent) did not believe today’s Large Language Models are close to the level of intelligence needed for reliable real-world decision-making.

Half of respondents (50 percent) were unsure whether the Large Language Model approach would prove to be a dead end, and 34 percent thought it would.

Implementation

Respondents were cautious about how companies, individuals, and especially children should approach AI.

The vast majority (90 percent) agreed that many companies are adopting AI without a clear plan for how it will create value.

An even higher percentage (95 percent) believed heavy reliance on AI tools weakens people’s ability to think, write, and reason for themselves.

That belief may be supported by a study from the Massachusetts Institute of Technology (MIT), which asked three groups of people to compose essays. The group assigned to use OpenAI’s ChatGPT showed lower brain engagement and “consistently underperformed at neural, linguistic, and behavioral levels” compared with the groups assigned to use Google or no AI tools at all for the same task.

In our survey, an overwhelming 97 percent of respondents agreed that children and students should face strict limits on how they use AI for schoolwork.

The Massachusetts Institute of Technology campus in Cambridge, Mass., on Oct. 18, 2025. (Learner Liu/The Epoch Times)

Impact on Employment

Jobs become expendable when AI can perform most of the tasks associated with them, according to a study coauthored by Lawrence Schmidt of MIT. However, when AI is assigned just some of the tasks, employment in the role can grow as humans are left to focus on ideation or critical thinking, the study concluded.

Even so, our readers were highly pessimistic about the impact of AI on employment.

Eighty-six percent believed AI will cause significant job losses in white-collar and professional fields over the next five to 10 years.

However, the same share (86 percent) believed recent layoffs attributed to “AI efficiency” are often more about cost-cutting than true technological gains.

A mere 15 percent thought new jobs created by AI would be sufficient to replace the jobs that are lost, compared with 70 percent who did not.

Regulation, Economy

Respondents were also concerned about an AI bubble and its potential effect on the economy.

Some $30 billion to $40 billion has been invested in generative AI, but 95 percent of organizations have received no return on investment, according to a recent study by MIT.

Three-quarters (75 percent) of our respondents believed financial markets are experiencing an AI-driven bubble.

A trader monitors financial data on the floor of the New York Stock Exchange on Oct. 1, 2025. U.S. stocks fell on Oct. 10 over concerns that China’s new rare earth export controls could hurt growth at AI companies. (Seth Wenig/AP Photo)

Even more (82 percent) believed investors and companies are overestimating how much profit AI will generate in the near future. And 84 percent agreed that an AI bubble, if it bursts, could trigger broader economic problems.

The highest number, 95 percent, thought regulators and governments are unprepared for the risks posed by advanced AI systems.

Concerns

Eighty-eight percent of respondents did not trust AI systems to respect basic moral boundaries without close human oversight.

Although AI systems appear to interact like human beings, they do not understand the content they process, according to Stanford University.

They sometimes create plausible-sounding answers that are incorrect. And they amplify the biases or points of view within the materials used to “train” them.

Readers voiced an array of concerns about AI. Moral questions about the technology were most prominent.

“AI cannot be taken at face value because it does not subscribe to any moral standard,” one reader wrote.

Another said, “AI is amoral right now, as it is given access to all of the available data. It can be manipulated by restricting the available data.”

An illustration of Anthropic, an American AI company, on Aug. 1, 2025. Michal Prywata warns that published AI-fabricated data can train new models, creating a misinformation feedback loop. (Riccardo Milani/Hans Lucas/AFP via Getty Images)

“AI is spiritually void and so morally handicapped. Human problems require human perspectives,” another wrote.

Readers also wrote about the danger of losing personal freedom due to the possible misuse of AI.

“AI is used for monitoring everything. There will be no privacy anywhere,” one said.

Another said, “My biggest concern is not being able to control it.”

Readers listed many other concerns with the technology, including the loss of critical thinking, the dumbing down of the population, the introduction of bias, a loss of human creativity, and a negative developmental impact on children.

One reader advised a balanced approach to this technology.

“AI, if properly used, can positively affect a wide range of industries,” the reader said. “However, it will cause an equal amount of problems as it resolves.”